Gregg Keizer, "Microsoft specs out 'pay as you go' PC scheme," Computerworld

The idea is something that might have been a story problem in a class on welfare economics: assuming the cost of metering computer usage is negligible, discuss the merits of such a proposal. MS has filed a patent application for a scheme to sell computers (presumably well below the cost of production), then bill customers both for the use of installed programs and for the use of computing power.

Microsoft's plan would instead monitor the machine to track things such as disk storage space, processor cores and memory used, then bill the user for what was consumed during a set period.

So you would be billed x dollars per MIPS-hour, even though this would require you to have the highest-performing processor installed all the time. It would also let you use premium software briefly, at (presumably) hourly rates.
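A minimal sketch of how a metered bill for one period might be computed, assuming a flat per-unit rate for each metered resource. The resource names, units, and rates below are hypothetical; neither the article nor this post specifies them. Processor use is metered in MIPS-hours to follow the example above.

```python
from dataclasses import dataclass

# Hypothetical per-unit rates in dollars; NOT taken from Microsoft's filing.
RATES = {
    "mips_hours": 0.002,        # processor use, per MIPS-hour
    "gb_memory_hours": 0.01,    # memory held, per gigabyte-hour
    "gb_disk_days": 0.003,      # disk storage, per gigabyte-day
    "premium_app_hours": 1.50,  # premium software, per hour of use
}


@dataclass
class UsageRecord:
    """Metered consumption of one resource during the billing period."""
    resource: str
    quantity: float


def period_bill(records: list[UsageRecord], rates: dict[str, float]) -> float:
    """Sum rate * quantity over every metered record in the period."""
    total = 0.0
    for rec in records:
        if rec.resource not in rates:
            raise KeyError(f"no rate defined for {rec.resource!r}")
        total += rates[rec.resource] * rec.quantity
    return round(total, 2)


usage = [
    UsageRecord("mips_hours", 5000.0),
    UsageRecord("gb_memory_hours", 400.0),
    UsageRecord("gb_disk_days", 3000.0),
    UsageRecord("premium_app_hours", 2.0),
]
print(period_bill(usage, RATES))  # prints 26.0
```

The bill is just a dot product of usage and rates, which is why the post's premise ("assuming the cost of metering is negligible") carries so much weight: everything hinges on measuring the quantities cheaply and credibly.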

At first blush, this does sound a lot like MS is at it again, trying to squeeze more revenue out of customers for software that is costlier and buggier. A major benefit for MS would be stimulating computer revenue by offering pay-per-use options; note that an extremely severe recession is approaching. With respect to hardware, there would be an obvious relative increase in the incentive to get the most powerful devices, since there would be no price premium... except on the occasions when you used their full capability. Semiconductor fabricators like AMD might grumble about the price squeeze imposed by PC makers like Dell, but really, they would only need to ship a larger number of top-of-the-line chips, rather than a mix of chips at different price points.

Where the idea gets interesting is software, since the object would be to create a market for much higher-end programs (most likely games, but also business applications). MS could let users download newly developed programs "risk-free," collect revenue, and perhaps stimulate demand. That raises the question: what exactly would this scheme do for software demand?

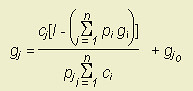

Solving a Three-Good Utility Function

(Section excised and put in another post.)

Findings

Discussions of utility functions usually present them as indifference curves between two similar goods. I prefer to think of utility functions as part of a firm's production function, in the sense that there's more money to be made with an optimal expenditure on different items. But in the case of an actual business strategy, it makes sense to begin with the understanding that customers can spend money on

- high-end software (z)

- low-end software (y)

- everything else (x).

Usually I use the x-axis to represent "everything else" (example). Textbook writers, sometimes in an effort at humor, will select two very similar items (pizza versus hamburgers), but assume that consumers' combined expenditure on the two items will remain the same regardless. I remain curious, though, about what would happen in a market for two similar items on which most income is spent on neither. If the price of one goes down, demand for the other need not go down (as it would if there were only two items).

Another deviation from usual practice is to use the linear expenditure function instead of a Cobb-Douglas function. The Cobb-Douglas utility function is unappealing to me because, while it's easy to use mathematically, it results in a fixed share of income being spent on each good. Logically, if the price of a thing is sharply reduced, you would expect people to spend a larger share of their income on that thing: spending the same amount of money as before now yields more satisfaction, so people will find more occasions to spend money on it, not merely buy more units. For some products (health care), the opposite may be true, in which case the threshold level of consumption can be made negative.
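To make the complaint about Cobb-Douglas concrete: with utility $U = \alpha\ln x + \beta\ln y + \gamma\ln z$, each good receives the fixed fraction of income given by its weight, so a price change alters the quantity bought but never the budget share. A minimal sketch; the weights, prices, and income are arbitrary illustrative values, not the ones used for the graph.

```python
def cobb_douglas_demand(weights, prices, income):
    """Utility-maximizing quantities for U = sum(w_i * ln(q_i)).

    Each good gets the fixed fraction w_i / sum(w) of income,
    so quantity = share * income / price.
    """
    total = sum(weights)
    return [w / total * income / p for w, p in zip(weights, prices)]


def budget_shares(quantities, prices, income):
    """Fraction of income spent on each good."""
    return [q * p / income for q, p in zip(quantities, prices)]


weights = [1.0, 2.0, 3.0]   # illustrative alpha, beta, gamma
income = 1200.0
before = [1.0, 10.0, 50.0]  # prices of x, y, z
after = [1.0, 10.0, 25.0]   # price of high-end software (z) cut in half

for prices in (before, after):
    q = cobb_douglas_demand(weights, prices, income)
    print([round(s, 3) for s in budget_shares(q, prices, income)])
```

Halving the price of z doubles the quantity of z bought, yet both lines print the same shares, which is exactly the rigidity the linear expenditure function is meant to relax.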

The threshold level of consumption is a phrase I made up to refer to what $x_0$, $y_0$, and $z_0$ represent: a minimum level of consumption of these respective goods. Consumption of $x < x_0$ means that $x$ ties up income but contributes nothing to utility. As is often the case, extreme conditions are seldom relevant: we aren't usually interested in situations where $x < x_0$. Instead, we're interested in situations where $x \gg x_0$, and we're making a modest shift in position. Technically, a negative threshold level of consumption implies that even negative consumption of a thing contributes to utility, to say nothing of no consumption at all. That's absurd. On the other hand, the curve created by a negative threshold may realistically describe conditions in which an increase in prices leads to an increase in total expenditures.
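For reference, here is the textbook Stone-Geary utility function that underlies the linear expenditure system, written with the thresholds defined above. This is an assumption on my part: the original derivation was moved to another post, so its exact specification may differ. Maximizing $U$ subject to the budget constraint gives the demand for high-end software:

$$
U(x, y, z) = \alpha \ln(x - x_0) + \beta \ln(y - y_0) + \gamma \ln(z - z_0),
\qquad p_x x + p_y y + p_z z = I
$$

$$
p_z z^* = p_z z_0 + \frac{\gamma}{\alpha + \beta + \gamma}\left(I - p_x x_0 - p_y y_0 - p_z z_0\right)
$$

Spending on each good is thus linear in income and prices, hence the name. Setting $x_0 = y_0 = z_0 = 0$ collapses it back to Cobb-Douglas, with $z$ taking the fixed share $\gamma / (\alpha + \beta + \gamma)$ of income.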

I set up the equation so that threshold levels of consumption were positive for all goods; the price of "everything else" was fixed; high-end software yielded a higher utility per unit; and software generally had a higher utility per unit than "everything else." I found that increasing the price of $y$ actually reduced spending on (demand for) $z$, albeit far more weakly than a reduction in the price of $z$ itself increased it.
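A minimal sketch of that experiment, assuming the Stone-Geary/linear expenditure form (the post's own equation is elsewhere). The coefficients and thresholds are illustrative, chosen only to match the qualitative setup described: all thresholds positive, $p_x$ fixed at 1, and $\beta$ and $\gamma$ much larger than $\alpha$.

```python
def les_demand(coeffs, thresholds, prices, income):
    """Quantities demanded under the linear expenditure system.

    q_i = q0_i + (c_i / sum(c)) * (income - sum(p_j * q0_j)) / p_i
    Requires income to exceed the cost of the threshold bundle.
    """
    committed = sum(p * q0 for p, q0 in zip(prices, thresholds))
    supernumerary = income - committed
    if supernumerary <= 0:
        raise ValueError("income does not cover the threshold bundle")
    total = sum(coeffs)
    return [q0 + (c / total) * supernumerary / p
            for c, q0, p in zip(coeffs, thresholds, prices)]


coeffs = (1.0, 4.0, 5.0)        # alpha, beta, gamma (illustrative)
thresholds = (500.0, 2.0, 1.0)  # x0, y0, z0 (illustrative, all positive)
income = 2000.0


def z_star(p_y, p_z, p_x=1.0):
    """Demand for high-end software, holding the price of x fixed."""
    return les_demand(coeffs, thresholds, (p_x, p_y, p_z), income)[2]


base = z_star(20.0, 100.0)
y_dearer = z_star(22.0, 100.0)   # low-end software 10% more expensive
z_cheaper = z_star(20.0, 90.0)   # high-end software 10% cheaper
print(round(base, 3), round(y_dearer, 3), round(z_cheaper, 3))
```

Raising $p_y$ lowers $z^*$ because buying the threshold quantity $y_0$ now eats more of the budget, leaving less supernumerary income for $z$; with these numbers that cross-price effect is tiny next to the own-price effect of cutting $p_z$ by the same 10%.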

A lot of this has to do with the coefficients of the utility function ($\alpha$, $\beta$, $\gamma$, $x_0$, $y_0$, and $z_0$) and with income $I$. The values of $\alpha$, $\beta$, and $\gamma$ determine the gradient of the utility function at $I$. When creating the graph above, I chose values for $\beta$ and $\gamma$ that were much higher than $\alpha$; that reflects an assumption that ongoing expenditures on low-end software (not to mention high-end software) provide more bang for the buck than money spent on "everything else." That's an intensely controversial proposition, but I doubt it would face controversy at Microsoft.

The values for threshold spending ($x_0$, $y_0$, and $z_0$) are naturally a mystery. High values for $x_0$ and $y_0$ (i.e., for both "everything else" and low-end software) lower $z^*$, the utility-maximizing quantity of high-end software, while a high value for $z_0$ increases $z^*$. In practice, this means that, if thresholds are high, a price reduction causes expenditures on the good to increase. If the threshold is 0, then a price reduction leaves expenditures unchanged. For computers generally, there is strong historical evidence that falling prices have sharply increased expenditures, leading to the conclusion that the threshold value is large but is offset by a high utility coefficient.

Microsoft's original business scheme would shift software expenditure from low-end to high-end software, and stimulate spending on software generally. The logic is intuitive: high-end functionality would be on tap, but users would not have to commit to owning the whole package. This would increase the overall utility of software per se.

Labels: client/server, economics, efficiency, microprocessors, Microsoft Windows, MS Windows